How good is ‘Google Sites’ as a web hosting platform?

For my contribution to the Computer Measurement Group’s (CMG) yearly conference (CMG Las Vegas 2012), I am reviewing a number of web hosting options. One of the basic options is ‘Google Sites’, which combines a content management system (CMS), hosting and a content distribution network in one package. You can have a reasonable website running in a few minutes; just add content. It is a sort of alternative to blog hosting on wordpress.com or posterous.com. And it is free.

The obvious question then is: how good is it, and what load will it sustain? First, some basic results: one of my sites is hosted on Google Sites, and it failed 15 out of 8611 tests in June 2012, which corresponds to an uptime of better than 99.8%. The average load time of the first request is under 900 milliseconds, though it varies somewhat by location. Loading the full page takes longer: rendering starts after about 1.5 seconds, and the page is fully loaded after about 2.5 seconds. See http://www.webpagetest.org/result/120706_VE_BTR/ for a breakdown of the site download.
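The uptime figure follows directly from the test counts. A minimal Python check, treating every test as equally weighted (an assumption; the monitoring interval and test locations are not stated here):

```python
def availability(failed: int, total: int) -> float:
    """Fraction of monitoring tests that succeeded (each test weighted equally)."""
    return 1 - failed / total

# June 2012 figures from the text: 15 failures out of 8611 tests.
june = availability(15, 8611)
print(f"uptime: {june:.3%}")  # uptime: 99.826%
```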

A more interesting question is: how does it scale? Can it handle more load than a dedicated server?

A regular dedicated server will handle more than 100 requests per second. If a pageview results in 10 requests, such a server will deliver at least 10 pageviews per second, which should be good enough for a typical blog. Most vanity bloggers would be happy with 10 pageviews per hour :-).
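The capacity estimate is a simple division, and converting it to an hourly rate shows how much headroom it leaves. A sketch using the paragraph's illustrative figures (100 requests/second and 10 requests per pageview are assumptions from the text, not measurements):

```python
def pageviews_per_second(requests_per_second: float, requests_per_pageview: int) -> float:
    """Each pageview costs requests_per_pageview HTTP requests."""
    return requests_per_second / requests_per_pageview

pv_per_s = pageviews_per_second(100, 10)
pv_per_hour = pv_per_s * 3600  # seconds per hour
print(pv_per_s, pv_per_hour)  # 10.0 36000.0
```

That is 3600 times the vanity blogger's 10 pageviews per hour.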

Here is what I did, step by step. I started by creating a page on a fresh domain at Google Sites. With JMeter I set up a small script that requests that page. This script was then uploaded to WatchMouse, for continuous performance evaluation, and to BlazeMeter, for load testing. After an initial trial with a single server, we fired up 8 load-generating servers with 100 threads (simulated users) each, 800 simulated users in total.
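The test itself was a JMeter script run on BlazeMeter. The same closed-loop pattern (each simulated user requests the page, waits, and repeats) can be sketched in plain Python for readers without those tools. This is a minimal illustration, not the script used here; `run_closed_loop`, `fetch`, `users`, `duration_s` and `think_time_s` are hypothetical names, and a real run would pass something like `lambda: urllib.request.urlopen(url).read()` as `fetch`, with `url` pointing at the page under test.

```python
import threading
import time
from typing import Callable

def run_closed_loop(fetch: Callable[[], None], users: int,
                    duration_s: float, think_time_s: float) -> list[float]:
    """Run `users` threads; each loops fetch -> record latency -> think."""
    latencies: list[float] = []
    lock = threading.Lock()  # protects the shared latency list
    deadline = time.monotonic() + duration_s

    def user() -> None:
        # A new request starts only while the test window is still open.
        while time.monotonic() < deadline:
            start = time.perf_counter()
            fetch()
            elapsed = time.perf_counter() - start
            with lock:
                latencies.append(elapsed)
            time.sleep(think_time_s)  # the built-in delay between requests

    threads = [threading.Thread(target=user) for _ in range(users)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return latencies
```

Running one such process per load-generating machine with `users=100` mirrors the 8 × 100 setup; achieved throughput is `len(latencies) / duration_s`.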

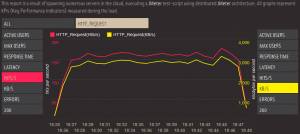

You can see the result in the next graph: Google Sites easily handles over 150 requests per second, at a bandwidth of 3 megabytes per second. Note that each request here is a single HTTP request, not a full pageview.
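Dividing the bandwidth by the request rate gives a rough idea of the average response size. This sketch assumes that a megabyte is 10**6 bytes and that both figures were read at the same point in the test; the graph does not confirm either.

```python
def avg_response_bytes(bytes_per_second: float, requests_per_second: float) -> float:
    """Average bytes per response, assuming all bandwidth is response data."""
    return bytes_per_second / requests_per_second

size = avg_response_bytes(3e6, 150)
print(f"{size / 1000:.0f} kB per response")  # 20 kB per response
```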

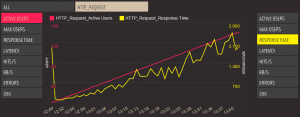

Interestingly, Google Sites does some kind of rate limiting, as the next picture shows. As the number of simulated users increases, the response time increases as well, even at low volumes. There is no ‘load sensitivity point’ (a load level at which response time starts to climb sharply) indicative of resource depletion.

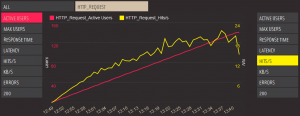

The next picture shows that the response rate (requests served per second) simply levels off.

In fact, it is likely that Google Sites is rate limiting by source IP address: while this test was running, the independent monitoring by WatchMouse showed no correlated variation in its measurements.
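To make the hypothesis concrete: a limiter that queues excess requests per source IP, instead of rejecting them, would produce this pattern. Response times seen from the load generators' addresses grow with load, while a monitor connecting from a different address sees no change. The Python sketch below is a hypothetical illustration of that idea (a per-key virtual scheduler, a form of traffic shaping); it is not Google's implementation, and `PerIPShaper` and its `rate` parameter are invented for this example.

```python
from collections import defaultdict

class PerIPShaper:
    """Hypothetical per-source-IP shaper: delays excess requests, never rejects."""

    def __init__(self, rate: float) -> None:
        self.interval = 1.0 / rate  # seconds between served requests per IP
        # Earliest time at which each IP's next request may be served.
        self.next_free: dict[str, float] = defaultdict(float)

    def delay(self, ip: str, now: float) -> float:
        """Return how long a request from `ip` arriving at `now` must wait."""
        start = max(now, self.next_free[ip])
        self.next_free[ip] = start + self.interval
        return start - now

shaper = PerIPShaper(rate=2.0)
# Five simultaneous requests from one load generator queue up behind each other.
print([shaper.delay("10.0.0.1", 0.0) for _ in range(5)])  # [0.0, 0.5, 1.0, 1.5, 2.0]
# A monitor on another address is unaffected.
print(shaper.delay("10.0.0.2", 0.0))  # 0.0
```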

Some final technical notes: if you want to maximize the number of requests per second, you need many threads (simulated users) with built-in delays. JMeter is not good at simulating users without delays.
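The relation between threads, delays and throughput in a closed-loop test is the interactive response time law, a form of Little's law: with N simulated users, mean response time R and mean think time (delay) Z, throughput is X = N / (R + Z). The Python sketch below applies it. The first example uses made-up numbers. The second infers a cycle time from the 800 users and roughly 150 requests/second reported earlier, which assumes all 800 users were active at that moment; the graphs do not confirm this.

```python
def closed_loop_throughput(users: int, response_s: float, think_s: float) -> float:
    """Interactive response time law: X = N / (R + Z)."""
    return users / (response_s + think_s)

def implied_cycle_time(users: int, throughput: float) -> float:
    """Rearranged law: R + Z = N / X, seconds per request cycle per user."""
    return users / throughput

# Illustrative: 800 users, 0.5 s response time, 4.5 s think time.
print(closed_loop_throughput(800, 0.5, 4.5))  # 160.0
# 800 users at ~150 requests/second imply ~5.3 s per cycle per user.
print(round(implied_cycle_time(800, 150), 2))  # 5.33
```

If the server caps X, adding users past that cap can only raise R, which fits the rising response times seen above.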

By the way, the site under test was served from more than 40 different IP addresses. The site has low latency to locations around the world: for example, test locations in Ireland, China, San Francisco and Malaysia all have connect times of less than 5 milliseconds. This supports the statement that Google Sites uses some kind of CDN.
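Connect time here means the duration of the TCP handshake, excluding the DNS lookup. A minimal Python sketch for measuring it from a single location follows. `connect_time` is a hypothetical helper, and the monitoring services used above may measure connect time differently. Running it against the site's hostname from several locations, and noting the IP address returned each time, is one way to observe the many addresses a CDN hands out.

```python
import socket
import time

def connect_time(host: str, port: int = 80, timeout: float = 5.0) -> tuple[str, float]:
    """Resolve host once, then time only the TCP connect (DNS excluded)."""
    family, socktype, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM)[0]
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        start = time.perf_counter()
        sock.connect(addr)  # completes after the three-way handshake
        elapsed = time.perf_counter() - start
    return addr[0], elapsed  # resolved IP address and connect time in seconds
```

For example, `ip, seconds = connect_time("example.com", 80)` reports which address was used and how long the handshake took.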